Vol. 9 No. 4, July 2004


Brinley Franklin


Terry Plum


This paper examines the methodology and results from Web-based surveys of more than 15,000 networked electronic services users in the United States between July 1998 and June 2003 at four academic health sciences libraries and two large main campus libraries serving a variety of disciplines. A statistically valid methodology for administering simultaneous Web-based and print-based surveys using the random moments sampling technique is discussed and implemented. Results from the Web-based surveys showed that at the four academic health sciences libraries, there were approximately four remote networked electronic services users for each in-house user. This ratio was even higher for faculty, staff, and research fellows at the academic health sciences libraries, where more than five remote users for each in-house user were recorded. At the two main libraries, there were approximately 1.3 remote users for each in-house user of electronic information. Sponsored research (grant funded research) accounted for approximately 32% of the networked electronic services activity at the health sciences libraries and 16% at the main campus libraries. Sponsored researchers at the health sciences libraries appeared to use networked electronic services most intensively from on-campus, but not from in the library. The purpose of use for networked electronic resources by patrons within the library is different from the purpose of use of those resources by patrons using the resources remotely. The implications of these results on how librarians reach decisions about networked electronic resources and services are discussed.

This study is a contribution to the analysis of how library usage is changing as a result of the advent of networked electronic services. It also suggests a methodology for collecting reliable information about the implications of networked electronic services usage patterns in the electronic information environment, which library directors and others can use for strategic planning and assessment purposes. The question this study examines is: 'What can we know about the usage and users of networked electronic resources in U.S. medical and academic libraries, especially those who do not come into the library?'

The digital information environment has dramatically changed the way that faculty and students access information offered by academic libraries. Several digital information usage studies in recent years have focused on standardized usage counts. These include: the COUNTER Project; the Association of Research Libraries' E-metrics Project; the ICOLC Guidelines for Statistical Measures of Usage of Web-Based Indexed, Abstracted, and Full-Text Resources; the European Union's Library Performance Measurement and Quality Management System - EQUINOX Project; ISO 2789:2003 Information and Documentation International Library Statistics and ISO 11620:Amd 1:2003 Information and Documentation Library Performance Indicators and NISO Standards; CENDI Projects Metrics and Evaluation; and others. Studies have also been developed that include electronic resources in assessment measures of library performance (e.g., LibQUAL+™).¹

User surveys that actually query networked services users in real-time as they access electronic materials are less common. This lack is puzzling, insofar as user surveys in traditional (i.e., non-digital) library environments are well documented (Covey 2002).

This paper examines the methodology and results from Web-based surveys of networked electronic services² usage at academic libraries in the United States between July 1998 and June 2003. More than 18,000 library users were surveyed as they accessed networked electronic services at five academic health sciences libraries and two large main campus libraries serving a variety of disciplines.

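This investigation builds on an ongoing research agenda by the authors (Franklin, 2001; Franklin and Plum, 2002) and addresses a number of issues that demonstrate how library users are acting in the new digital environments. Specifically, it addresses research questions about a statistically valid survey methodology for networked environments, sponsored researchers' use of networked electronic resources, differences in the category of user by location, and differences in purpose of use between electronic services and traditional library services.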

In addition to ongoing projects, the measurement of networked electronic resources has created a large, current literature. Helpful reviews of this literature include Bertot (2001); Bertot et al. (2002); Breeding (2002); Luther (2000); and McClure and Bertot (2001). Patterns of database use in academic libraries, particularly by time of month, day of the week, and time of day, have been reported by Tenopir and Read (2000). Web surveys are not given a prominent place in this literature nor in projects exploring the measurement of networked electronic resources, possibly because the point of the projects is to collect data on the use and value of electronic resources.

Most Web surveys are non-probability based, do not represent a random sample of the users, and have significant sampling bias introduced by non-respondents. A very useful summary of Web-based survey considerations by Gunn (2002) identifies many of the issues associated with Web-based surveys. She summarizes the probability and non-probability based, Web-based survey categories originally proposed by Couper (2000). The non-probability categories of Web surveys include polls as entertainment, unrestricted self-selected surveys, and volunteer opt-in panels.

Probabilistic Web surveys include intercept surveys (every nth respondent is surveyed), list-based samples (usually solicited through an e-mail list sample), mixed mode surveys (where the Web is only one tool), and pre-recruited panels of Internet users. In a later article, Couper (2001) discusses sources of measurement error in Web-based surveys, many of which are addressed by the authors' sampling plan and are discussed below. Dillman and Bowker (2001) and Dillman et al. (1998) propose fourteen principles for the design of Web surveys to mitigate the traditional sources of survey error: sampling, coverage, measurement and nonresponse. These principles were followed, as appropriate, in the authors' Web-based survey design.

Part of the following analysis compares the results of Web-based surveys to the results of print-based surveys. However, if patrons answer the print surveys differently than the Web-based surveys, then such comparisons are unjustified. A discussion by Baron and Siepman (1999) indicates that there are few differences, a conclusion supported by Lee et al. (2002). Perkins and Yuan (2001) describe a library satisfaction survey with identical content for library Web and in-person patrons to compare the responses. The authors, in Table 5 of this paper, felt justified in drawing conclusions by associating print and Web-based surveys, both administered according to a similar random sampling plan. In fact, high correlations existed between electronic services use as reported by the Web-based and print survey forms when administered concurrently.

Other Web-based surveys do not measure usage at the point of use. Lazar and Preece (2002) discuss the implementation of Web-based forms to evaluate networked electronic resources, but their design has substantial differences from the methodology presented here. Most other Web-based surveys are user surveys focusing on perceptions of service quality (Covey, 2002).

What is a statistically valid methodology for capturing electronic services usage both in the library and remotely through Web surveys? Are particular network configurations more conducive to studies of digital libraries patron use?

In 1990, Dayton and Scheers reviewed library usage patterns at nine academic libraries, representing more than 17,500 library uses at a variety of types of libraries. The two statisticians determined that, when trying to estimate total sponsored research use as a percentage of total library use, the three largest contributors to the prediction equations were the sample size, the ratio of sponsored research use to total use, and the coefficient of variation for research use. These three variables accounted for 96% of the variance in the standard error in the actual library usage data examined at the nine libraries studied.

In practice, sample size can be fairly accurately predicted if the investigator can anticipate a reasonable estimate for the mean and standard deviation of sponsored research use. More recently, a third statistician, Uwe Koehn, reviewed electronic services usage data provided by the authors from three health sciences libraries and two main libraries. He reported that, in the electronic environment, the sample size (n) required for accuracy A is n = 1/A². Koehn (personal communication) recommends stratifying among the various times of the year (academic sessions, summer sessions, inter-sessions). Ideally, the size of the sample drawn during each segment of the academic year would be in the same proportion as the fraction of electronic services use during that academic cycle to the total electronic services usage over the course of the year.
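The sample size rule and the proportional stratification described above can be sketched in a few lines of Python. This is only an illustration: it assumes that accuracy A is expressed as a proportion (for example, 0.05), and the usage shares per academic cycle are hypothetical values, not figures from the study. The helper names `sample_size` and `allocate` are likewise invented for this sketch.

```python
import math


def sample_size(accuracy: float) -> int:
    """Return n = 1 / A**2, rounded up to a whole number of responses."""
    if not 0 < accuracy < 1:
        raise ValueError("accuracy must be a proportion between 0 and 1")
    # The small epsilon guards against floating-point noise, e.g.
    # 1 / 0.05**2 evaluating to a hair away from exactly 400.
    return math.ceil(1.0 / (accuracy * accuracy) - 1e-9)


def allocate(n: int, usage_share: dict) -> dict:
    """Split n across strata in proportion to each stratum's share of use."""
    total = sum(usage_share.values())
    exact = {k: n * v / total for k, v in usage_share.items()}
    alloc = {k: math.floor(x) for k, x in exact.items()}
    # Hand back the responses lost to rounding, largest remainder first.
    leftover = n - sum(alloc.values())
    by_remainder = sorted(exact, key=lambda k: exact[k] - alloc[k], reverse=True)
    for k in by_remainder[:leftover]:
        alloc[k] += 1
    return alloc


# Hypothetical shares of annual electronic services use per academic cycle.
shares = {"academic sessions": 0.70, "summer sessions": 0.20, "inter-sessions": 0.10}
n = sample_size(0.05)
plan = allocate(n, shares)
print(n, plan)
```

Under these assumptions an accuracy of 0.05 calls for 400 responses, split 280, 80 and 40 across the three cycles. The same n = 1/A² figure is close to the familiar conservative rule of thumb for estimating a proportion at roughly 95% confidence, although the source does not say that this was the statistician's derivation.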

Once an appropriate sample size was determined, the authors' sampling plan was based on the random moments sampling technique. This plan is a probability-based, Web-based user survey, but differs from the four categories of probability-based surveys identified by Couper (2000). The plan addresses the problem of coverage error, or the mismatch between the target and frame populations. Among the target population, Internet access can be safely assumed. Even if this is not the case, the frame comprises those patrons who access networked electronic resources and therefore matches the target population. Although large numbers of respondents were obtained, we do not assume that large numbers of respondents are somehow more valid. Statistical inference is only possible with probability-based sampling designs, which we believe this sampling plan represents.

To address non-respondent bias, the authors' Web-based questions are mandatory. Although incessant demands for registration, e-mail addresses, and identification information throughout the Web have encouraged Internet users to ignore surveys or, if required, to falsify information, this Web-based survey does not ask for the type of identifying information that would raise alarms on the part of the patrons. Because the survey carries the legitimacy and authority of the respondent's library, a greater likelihood of authentic answers is expected than on registration pages to any number of Internet sites.

Measurement error, or the deviation of the answers from their true values, is a real concern in this survey. The survey investigates purpose of use of networked electronic resources, distinguishing sponsored or grant-funded research from instruction. Respondents may not fully understand the language required by OMB Circular A-21 of the U.S. Office of Management and Budget to define sponsored research and the other purpose of use categories. Misinterpretation or measurement error is reduced by requiring an account number for the grant, or by matching purpose of use with category of user (for example, an undergraduate student is unlikely to be engaged in sponsored research). For some of the later surveys, a grant account number was requested if the sponsored research category was selected.

The surveys were pre-tested in each local situation, and content validity was increased through several meetings with local librarians and Information Technology staff who are familiar with the environment and the population of the university or the medical centre. The surveys were viewed under several browsers for consistency, and consist of short, text-based questions, to minimize error from differences in appearance (Couper, 2001). The first and second usage questions (relating to sponsored research and instruction) were rotated on the print survey and on some of the Web-based surveys.

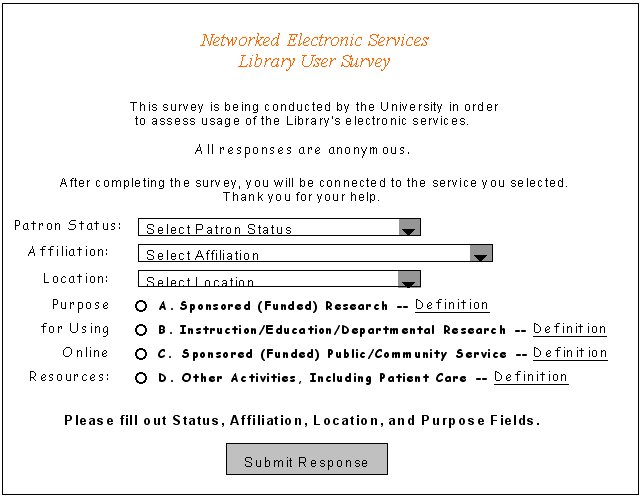

Web-based user surveys were conducted over the course of a year for each institution. The Web-based survey form (see Figure 1) was activated during survey periods as users accessed one of the library's networked electronic services. The survey form typically determined users' status (e.g., undergraduate student, graduate/professional student, faculty/staff, or other user), affiliation (e.g., school of medicine, school of law, college of arts and sciences, etc.), location (e.g., in the library or outside the library), and purpose of use (e.g., sponsored research, instruction, patient care, all other activities).

At least twenty-four hours of surveying took place at each of the seven libraries included in this study. The technical approach to surveying networked services usage was influenced by each library's local networked services environment. The most effective means of conducting the Web-based survey occurred in libraries where all access to networked electronic services passed through a gateway that authenticated users as they connected to secure electronic information.

Shim and McClure noted in their E-Metrics study of academic libraries that four libraries had instituted a click-through mechanism to count attempted log-ons to electronic databases and e-journals from the electronic resources pages. This mechanism tracked the number of times the page was accessed and the destination (databases):

The mechanism works only for database access through library Web pages. If the user bypasses the library Web site (e.g., typing the database vendor's Website directly or through stored bookmark), that access cannot be captured. The big advantage of the click-through mechanism is that uniform usage data can be collected by the library as opposed to obtaining inconsistent usage data from different vendors. (Shim and McClure, 2002)

Libraries that construct gateways to networked electronic resources can not only have consistent data, but can also run surveys that are inferentially sound. Gateways could be constructed from a variety of database-to-Web solutions or proxy re-writers. A useful discussion of database-driven Web sites is found in Antelman (2002) and also in Breeding (2002). Open source PHP/MySQL, Zope/PostgreSQL, Perl pass-through scripts, ColdFusion, Microsoft ASP, federated searching through the ILS, MyLibrary personalization structures, or rewriting proxy services such as EZProxy all carry gateway attributes, and make it less likely that patrons can find other paths to the networked electronic resources than those controlled by the library. Libraries with flat HTML pages, the links of which could be copied to bookmarks, departmental Web pages, personal pages, subject bibliography or quick shortcut pages, the 856 field of MARC records, or other avenues were much less likely to be able to control access (or collect commensurable data) in order to insert the Web-based survey at the prescribed times.

One of the strengths of this survey technique is that it is based upon actual use, not on predicted, intended, or remembered use. The respondent must choose the resource in order to be presented with the survey, therefore memory or impression management errors are avoided. Once the survey is completed, the respondent's browser is forwarded to the desired networked electronic resource. This approach is consistent with the random moments sampling technique. Each survey period is two hours, so each survey period in itself is only a snap-shot or picture of usage. Because the survey periods are randomly chosen over the course of a year and result in at least twenty-four hours of surveying, the total of the survey periods represents a random sample, and inferences about the population are valid. Users are presented with the survey as they select the desired networked electronic resource or service. This approach assumes that users will answer the survey, especially the purpose of use, in the contexts of their specific needs for the particular resource they have chosen. The purpose of use is distinct from the user. A researcher preparing for a class would answer differently than a researcher using library resources for a grant-funded project. The survey data do not uniquely and consistently identify the user. The user data are anonymous. These differences cannot be tracked by user in the survey data. However, there is some anecdotal evidence which indicates that researchers can and do make these distinctions.
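As an illustration of how two-hour survey periods might be drawn at random over a survey year, the following Python sketch divides the year into consecutive two-hour slots and samples twelve of them without replacement, giving twenty-four hours of surveying. The equal-probability draw, the function name `draw_survey_periods`, and the start date are assumptions made for the example; the source does not describe the exact selection procedure, and a stratified draw by academic cycle would need a separate call per stratum.

```python
import random
from datetime import datetime, timedelta
from typing import List, Optional, Tuple


def draw_survey_periods(year_start: datetime,
                        days: int = 365,
                        period_hours: int = 2,
                        n_periods: int = 12,
                        seed: Optional[int] = None) -> List[Tuple[datetime, datetime]]:
    """Draw n_periods distinct, non-overlapping survey periods at random."""
    slots_per_day = 24 // period_hours
    total_slots = days * slots_per_day
    rng = random.Random(seed)
    # Sampling slot indices without replacement gives every slot the same
    # chance of selection and rules out overlapping periods.
    chosen = sorted(rng.sample(range(total_slots), n_periods))
    length = timedelta(hours=period_hours)
    return [(year_start + i * length, year_start + (i + 1) * length)
            for i in chosen]


# Hypothetical survey year beginning 1 July 2002; the seed makes the
# schedule reproducible so it can be shared with library staff in advance.
periods = draw_survey_periods(datetime(2002, 7, 1), seed=42)
total_hours = sum((end - start).total_seconds() for start, end in periods) / 3600
for start, end in periods:
    print(start.isoformat(), "to", end.isoformat())
```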

Because the survey does not ask for impressions, reflections or self-reported patterns by the user, the survey does not indicate, for example, whether a researcher typically uses the library's networked electronic resources from outside of the library, but only whether during the sampled period the researcher was outside of the library when using a specific networked electronic resource. Data based on actual use are more reliable than data based upon remembered use or user-determined and user-reported patterns of use.

Libraries that do not have gateways are unable to create referral pages for every electronic resource, and so tend to insert the survey at the point of the list of databases, e-books or e-journals rather than at the point of use of the specific electronic resource. It is reasonable to expect that such libraries would lose respondents (have non-respondents) from the sample because they had found other ways to the networked electronic resource and would not see the form. For these libraries, the results are less valid, have more error due to non-response, and are not as reliable.

The authors have not yet evaluated score reliability in the manner discussed for LibQUAL+™ by Thompson et al. (2002), but as more data are collected, the reliability of the scores could be measured. However, if a gateway is present, the authors expect the methodology to be reliable and replicable.

Several interesting local negotiations occurred during the course of this study. In one library there was considerable concern over the annoyance factor of filling out the survey repeatedly, if the respondent was using a series of databases. However, this class of patron, the heavy user, may be more likely to be a grant-funded researcher, whose usage is important to track. The compromise reached in this library was the following: if the respondent chose a second or subsequent networked electronic resource, the selections from the previous choice were defaulted into the new form, except for the purpose of use. The purpose of use might in fact be different. This solution is elegant in that it collects all of the users and usages during the sample period, yet demonstrates to the respondent that the inconvenience of filling out the form repeatedly during the sample period is understood and has been mitigated.
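The gateway behaviour described above (present the survey only during a sampled period, carry earlier answers forward except purpose of use, then forward the browser to the chosen resource) can be sketched roughly as follows. All names here, including `gateway_action`, the field names and the example URL, are hypothetical and are not taken from any of the surveyed libraries' systems.

```python
from datetime import datetime

# Answers carried over between forms; purpose of use is deliberately absent,
# because it may differ from one resource to the next.
CARRIED_FIELDS = ("status", "affiliation", "location")


def in_survey_period(now, periods):
    """True if `now` falls inside any (start, end) survey period."""
    return any(start <= now < end for start, end in periods)


def gateway_action(now, periods, target_url, previous_answers=None):
    """Decide what the gateway does when a patron selects a resource."""
    if not in_survey_period(now, periods):
        return {"action": "redirect", "url": target_url}
    previous_answers = previous_answers or {}
    defaults = {f: previous_answers[f]
                for f in CARRIED_FIELDS if f in previous_answers}
    # After the form is submitted, the patron is forwarded to `next`.
    return {"action": "survey", "defaults": defaults, "next": target_url}


# Hypothetical example: one sampled period and a patron's second selection.
sampled = [(datetime(2002, 10, 3, 14), datetime(2002, 10, 3, 16))]
earlier = {"status": "faculty/staff", "affiliation": "school of medicine",
           "location": "on-campus, not in the library",
           "purpose": "sponsored research"}
second_visit = gateway_action(datetime(2002, 10, 3, 14, 30), sampled,
                              "https://db.example.edu/", earlier)
print(second_visit)
```

In this sketch the second form arrives with status, affiliation and location filled in, while purpose of use must be answered again.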

One of the questions refers to the location of the respondents. These locations are confirmed, on a sample basis, by comparing reported location on the survey to IP addresses associated with the results file, thus providing some internal validity to the question.
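A check of this kind could be sketched with Python's standard `ipaddress` module. The address blocks below are reserved documentation ranges standing in for a library's and a campus's real networks, and the function names and sample records are hypothetical. Users reaching the gateway through a proxy or VPN may appear to be on campus, so a check like this gives only partial confirmation.

```python
import ipaddress

# Hypothetical address blocks (reserved documentation ranges), not real ones.
LIBRARY_NETS = [ipaddress.ip_network("192.0.2.0/26")]
CAMPUS_NETS = [ipaddress.ip_network("192.0.2.0/24"),
               ipaddress.ip_network("198.51.100.0/24")]


def location_from_ip(ip: str) -> str:
    """Classify an address, checking the library block before the campus."""
    addr = ipaddress.ip_address(ip)
    if any(addr in net for net in LIBRARY_NETS):
        return "in the library"
    if any(addr in net for net in CAMPUS_NETS):
        return "on-campus, not in the library"
    return "off-campus"


def agreement_rate(records) -> float:
    """Share of (reported_location, ip) pairs where the two agree."""
    matches = sum(1 for reported, ip in records
                  if location_from_ip(ip) == reported)
    return matches / len(records)


# Hypothetical sample drawn from a results file.
sample = [
    ("in the library", "192.0.2.10"),
    ("on-campus, not in the library", "198.51.100.7"),
    ("off-campus", "203.0.113.5"),
    ("in the library", "192.0.2.200"),  # reported in-library, campus address
]
print(agreement_rate(sample))
```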

How extensively do sponsored researchers use the new digital information environment? Are researchers more likely to use networked electronic resources in the library or from outside the library?

In this study, sponsored research accounted for approximately 34% of the networked electronic services activity at the health sciences libraries (see Table 1) and 16% at the main campus libraries (see Table 2). Sponsored researchers at the health sciences libraries used networked electronic services most intensively from on-campus, but not from in the library.

At the four most recently surveyed academic health sciences libraries, surveyed between 2001 and 2003, sponsored research represented about one-third (33.71%) of all electronic services use. More than 86% (1833 of 2120) of sponsored research uses of networked electronic resources occurred outside the library. While 92% (1953 of 2120) of sponsored research uses occurred on campus, very few (287 of 2120, or 13.5%) actually took place in the libraries.

| | Sponsored (funded) research | Instruction, education and non-funded research | Patient care | Other activities | Total |
|---|---|---|---|---|---|
| In the library | | | | | |
| Library 1 | 19 | 67 | 12 | 33 | 131 |
| Library 2 | 88 | 332 | 67 | 100 | 587 |
| Library 3 | 168 | 234 | 53 | 346 | 801 |
| Library 4 | 12 | 94 | 17 | 29 | 152 |
| Subtotal, in the library | 287 | 727 | 149 | 508 | 1671 |
| Percent of sub-total | 17.2% | 43.5% | 8.9% | 30.4% | 100% |
| Percent of over-all total | 4.56% | 11.56% | 2.37% | 8.08% | 26.57% |
| On-campus, not in the library | | | | | |
| Library 1 | 261 | 167 | 19 | 17 | 464 |
| Library 2 | 688 | 593 | 328 | 135 | 1744 |
| Library 3 | 480 | 366 | 114 | 13 | 1035 |
| Library 4 | 237 | 169 | 94 | 13 | 513 |
| Subtotal, on-campus, not in library | 1666 | 1295 | 555 | 178 | 3695 |
| Percent of sub-total | 45.1% | 35% | 15% | 4.8% | 100% |
| Percent of over-all total | 26.49% | 20.59% | 8.82% | 2.83% | 58.75% |
| Off-campus | | | | | |
| Library 1 | 44 | 88 | 24 | 39 | 195 |
| Library 2 | 58 | 148 | 71 | 42 | 319 |
| Library 3 | 25 | 70 | 39 | 114 | 248 |
| Library 4 | 40 | 85 | 24 | 13 | 162 |
| Subtotal, off-campus | 167 | 391 | 158 | 208 | 924 |
| Percent of sub-total | 18.1% | 42.3% | 17.1% | 22.5% | 100% |
| Percent of over-all total | 2.66% | 6.22% | 2.51% | 3.31% | 14.69% |
| Total electronic services use | 2120 | 2413 | 862 | 894 | 6290 |
| Percent of total use | 33.71% | 38.37% | 13.71% | 14.22% | 100% |

At the two main campus libraries, surveyed in 2001, sponsored research use represented 16.1% of total electronic services use. More than 73% (739 of 1004) of sponsored research uses of networked electronic resources occurred outside the library, while only 56% of all electronic services use took place outside the library (Table 2).

However, the differences in distribution between these two libraries are large. For example, in the first main campus library, funded research in the library accounted for only 3.3% of the in-library total, whereas it accounted for 12.5% at the second library. Also, note that for the first library the outside use is less than the inside use, which is not consistent with usage patterns in any of the other libraries surveyed by the authors. The first library did not have a gateway, but relied upon placing the survey at the point of various lists of resources from the library home page. It is suspected that the data are less reliable.

Yet, the data are instructive. It may well be that inside the library on public workstations, patrons tend to access the networked electronic resources through library-provided menus. The surveys would be placed at these menus. Outside the library, it may be that patrons use bookmarks, departmental Web pages, or other routes to the networked electronic resources, and so were not intercepted by the survey. The unusually low numbers for a large university library support this speculation. The data also confirm the need for a gateway in order to collect a true data sample.

At all seven surveyed libraries combined, there were 12,948 remote electronic services uses and 5,682 electronic services uses in the libraries, or a ratio of more than two remote networked electronic services uses for each in-house use. At the five medical libraries, there were 3.4 remote electronic services uses (9579) for each in-house use (2842). At the two main libraries, there were 1.2 uses (3369) outside the library for each in-house use (2840), although if only the second library is considered, there were 1.6 uses (3067) for each in-house use (1906).
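The remote-to-in-house ratios quoted above follow directly from the in-library and outside-the-library totals reported in Tables 3 and 4. The short Python sketch below simply reproduces that arithmetic; the variable and function names are chosen for this illustration.

```python
# (in-library, outside-library) totals taken from Tables 3 and 4.
totals = {
    "medical libraries": (2842, 9579),
    "main libraries": (2840, 3369),
}


def remote_ratio(in_library: int, outside: int) -> float:
    """Remote uses recorded for each in-house use."""
    return outside / in_library


ratios = {name: round(remote_ratio(*pair), 1) for name, pair in totals.items()}

# Combine both groups for the figure covering all surveyed libraries.
in_all = sum(pair[0] for pair in totals.values())
out_all = sum(pair[1] for pair in totals.values())
ratios["all libraries"] = round(remote_ratio(in_all, out_all), 1)

print(in_all, out_all, ratios)
```

The combined totals come to 5,682 in-library and 12,948 remote uses, giving ratios of 3.4 for the medical libraries, 1.2 for the main libraries and 2.3 overall.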

| | Sponsored (funded) research | Instruction, education and non-funded research | Other activities | Total |
|---|---|---|---|---|
| In the library | | | | |
| Main Library 1 | 27 | 656 | 136 | 819 |
| Main Library 2 | 238 | 1178 | 490 | 1906 |
| Subtotal, in the library | 265 | 1834 | 626 | 2725 |
| Percent of sub-total | 9.7% | 67.3% | 23.0% | 100% |
| Percent of over-all total | 4.25% | 29.41% | 10.04% | 43.70% |
| Outside the library | | | | |
| Subtotal, outside the library | 739 | 2111 | 660 | 3510 |
| Percent of sub-total | 21.1% | 60.1% | 18.8% | 100% |
| Percent of over-all total | 11.85% | 33.86% | 10.59% | 56.30% |
| Total electronic services use | 1004 | 3945 | 1286 | 6235 |
| Percent of electronic services use | 16.10% | 63.27% | 20.63% | 100.0% |

Are there differences in the category of user of electronic information based on the user's location (e.g., in the library; on-campus, but not in the library, or off-campus)?

Table 3 reports demographic differences by location. For example, there were 1,283 faculty, staff, and research fellows who used a networked electronic resource from within the library during the sampled periods. This value represents 45.1% of the in-library users, but only 10.33% of all library users.

There is considerable variation in remote versus in-house use based on the demographics of the user and the type of library. At the five medical libraries, there were 4.5 remote faculty, staff, and research fellow users (5783) for each in-house user (1283) recorded (see Table 3). From within the library, faculty, staff, and research fellows were 45.1% of the sampled in-house users, whereas from outside the library, users in this category totaled 60.4% of remote users. Graduate students, interestingly, were roughly the same percentage of users both within and outside of the library: 31.7% of the in-house total, and 28.7% of the remote total. Not too surprisingly, the 'All other users' category is a higher percentage of the total in the library than for remote use, 16.8% to 6.1%, representing walk-ins who do not fall into the primary client groups and who probably do not have access to the networked resources from outside the library. Although different in number, the distribution of users between in-library and remote is not widely incongruent. Faculty, staff, and research fellows account for a greater share of use outside the library than inside it.

| | Total | Percent of sub-total | Percent of over-all total |
|---|---|---|---|
| In the library | | | |
| Graduate students | 902 | 31.7% | 7.26% |
| Faculty, staff, research fellows | 1283 | 45.1% | 10.33% |
| Undergraduate students | 180 | 6.3% | 1.45% |
| All other users | 477 | 16.8% | 3.84% |
| Total users, in the library | 2842 | 100% | 22.88% |
| Outside the library | | | |
| Graduate students | 2745 | 28.7% | 22.10% |
| Faculty, staff, research fellows | 5783 | 60.4% | 46.56% |
| Undergraduate students | 462 | 4.8% | 3.72% |
| All other users | 589 | 6.1% | 4.74% |
| Total users outside the library | 9579 | 100% | 77.12% |
| Total electronic services users surveyed | 12421 | | 100.00% |

At the two main libraries, there were almost two remote graduate student uses for each graduate student use in the library, but for all other categories, there were approximately the same number of in-library users as users outside the library.

Graduate students represent 21.6% of in-library users, and 34.6% of users outside the library. Faculty, staff, and research fellows accounted for 22.4% of in-library users, and 23.7% of users outside the library. Undergraduates tend to come into the library, representing 51.5% of in-library users, and only 39.1% of outside users.

| | Total | Percent of sub-total | Percent of over-all total |
|---|---|---|---|
| In the library | | | |
| Graduate students | 613 | 21.6% | 9.87% |
| Faculty, staff, research fellows | 637 | 22.4% | 10.26% |
| Undergraduate students | 1464 | 51.6% | 23.58% |
| All other users | 126 | 4.4% | 2.03% |
| Total users, in the library | 2840 | 100% | 45.74% |
| Outside the library | | | |
| Graduate students | 1166 | 34.6% | 18.78% |
| Faculty, staff, research fellows | 797 | 23.7% | 12.84% |
| Undergraduate students | 1317 | 39.1% | 21.21% |
| All other users | 89 | 2.7% | 1.43% |
| Total users outside the library | 3369 | 100% | 54.26% |
| Total electronic services users surveyed | 6209 | | 100.00% |

How does purpose of use (e.g., sponsored research, instruction, patient care) differ between electronic services use and traditional library services (e.g., print collections, reference services, etc.)?

Purpose of use for electronic information at the medical libraries most resembled print journal usage, which was measured by a print questionnaire. Electronic information usage for sponsored research purposes was higher than print journal use related to sponsored research at all but one of the medical libraries. At all of the medical libraries, a larger percentage of electronic information usage was related to sponsored research than the combined total for all library services, including both traditional and electronic collections and services.

At the two main libraries, sponsored research use of electronic services was similar to overall sponsored research use of the libraries. At one of the two main libraries, sponsored research use of print journals as a percentage of all print journal use (13.73%) was significantly higher than sponsored research use of electronic services as a percentage of all electronic services use (see Table 5).

| | Electronic services use | Print journal use | All library use |
|---|---|---|---|
| Medical libraries | | | |
| Library 1 | 41.00% | 18.53% | 14.94% |
| Library 2 | 31.47% | 27.03% | 22.07% |
| Library 3 | 31.75% | 34.39% | 22.93% |
| Library 4 | 29.79% | 24.19% | 17.88% |
| Average, medical libraries | 33.50% | 26.04% | 19.46% |
| Main libraries | | | |
| Main Library 1 | 9.11% | 13.73% | 9.39% |
| Main Library 2 | 10.42% | 9.67% | 9.31% |
| Average, main libraries | 9.77% | 11.70% | 9.35% |

Interestingly, there is a high degree of correlation in medical libraries between sponsored research usage of print journals and all library use (.927). There is a much lower, and negative, correlation between sponsored research usage of electronic services and either print journal usage (-.658) or all library usage (-.694). Although usage of electronic resources most resembled print journal usage, that correlation is relatively low. Patterns of usage of electronic resources in medical libraries are new and unique.
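These coefficients can be reproduced from the four medical library rows of Table 5. The sketch below assumes they are ordinary Pearson correlations computed across the four libraries, which the source does not state explicitly; with only four data points, the values are best read as descriptive.

```python
import math


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Sponsored research share of use (%), medical Libraries 1 to 4, Table 5.
electronic = [41.00, 31.47, 31.75, 29.79]
print_journals = [18.53, 27.03, 34.39, 24.19]
all_use = [14.94, 22.07, 22.93, 17.88]

r_print_all = pearson(print_journals, all_use)
r_elec_print = pearson(electronic, print_journals)
r_elec_all = pearson(electronic, all_use)
print(round(r_print_all, 3), round(r_elec_print, 3), round(r_elec_all, 3))
```

Under that assumption the three results round to 0.927, -0.658 and -0.694, matching the figures in the text.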

Remote users outnumbered in-house users of electronic information at all five medical libraries, although the percentage of remote users varied from 51% to 84%. The findings were not as consistent at the two main libraries. At one main library, 61% of electronic information usage was from remote users; at the other main library, 65% of electronic information usage was from in-house users. However, the data collection for the latter library is suspect, as has been discussed. The authors anticipate that follow-up studies with previous participants and additional studies at new libraries will help to further characterize electronic information usage by remote and in-house users.

The fact that more literature in the medical sciences is available electronically may help to account for why medical library users, and especially faculty, staff, and fellows, choose to use electronic services remotely. They may find that virtually all of their information needs can now be addressed from outside the library. This may be a trend that will re-occur in other disciplines as more networked electronic resources become available in those disciplines.

The vast majority of networked services use occurs on-campus, either in the library or from other locations on-campus, although resources in the electronic information environment are available to authorized faculty and students anywhere they have access to a computer and a network connection. Interestingly, the purpose of use (sponsored research, etc.) varied far more dramatically in both medical and academic libraries when comparing in-library use to remote use than did the categories of users (graduate student, etc.). The categories of users, to generalize, are similar in the library and outside of the library, but the purpose of use is very different. Use of networked electronic resources for sponsored research occurs predominantly outside of the library. Since it is unlikely that faculty would divide their time in such a way, or would come into the library for instructional purposes but not for research, one might speculate that the faculty who come into the library are different people from those who do not, despite the similar percentage distributions.

These data support the conclusion that patrons who log into networked electronic resources from outside the library are different from those who come into the library, a point that came up frequently in discussions with medical librarians. Yet many libraries make service decisions based upon activity at service points, for example, the reference desk. These service point data are often extended inferentially to represent the library population, for example, in collection development decisions. Although vendors supply usage statistics for their networked electronic resources, they do not distinguish between usage inside and outside the library. Librarians may therefore incorrectly assume that the usage of networked electronic resources in the library resembles the usage outside of the library.

Further, many libraries are re-inventing the library as a place, in order to attract grant-funded researchers and scientists into the building. Librarians may feel that the lack of researchers or grant-funded scientists physically present in the library is a result of dissatisfaction with, or lack of interest in, library services. The data presented here support the notion that the library is indeed doing its job and delivering resources electronically to its patrons, even though they do not come into the library. To reach funded researchers, the library should offer more electronic services in a virtual library, and not worry about their lack of attendance in the physical library.

This study focuses on the usage and users (both demographic and location) of electronic resources. It is easily replicable, given a suitable network topology, and libraries that wish to collect similar data about their users are encouraged to exploit or implement a gateway topology in order to generate true probability samples. Once the appropriate technical pieces are in place (a gateway, pass-through scripting, database-to-Web solutions, a rewriting proxy server, or some similar arrangement), it becomes technically easy to run similar surveys on any aspect of electronic services. Such information will never be available through Web transaction logs or vendor-produced data.
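As an illustration of the kind of gateway arrangement described above, the following is a minimal sketch in Python, not the scripts used at the participating libraries. It assumes a gateway that sees every request for a networked resource, draws survey periods at random from the study period, and sends a patron to a survey page only when the request falls inside a drawn period. The two-hour window length, the `/survey` path, the `next` parameter, and the function names are all hypothetical.

```python
import random
from datetime import datetime, timedelta
from urllib.parse import quote


def draw_survey_windows(period_start, period_end, n_windows,
                        window_hours=2, seed=None):
    """Randomly select n_windows distinct, non-overlapping survey windows
    from the study period (window length is an assumption)."""
    rng = random.Random(seed)
    slot = timedelta(hours=window_hours)
    total_slots = int((period_end - period_start) / slot)
    chosen = rng.sample(range(total_slots), n_windows)
    return sorted(period_start + slot * i for i in chosen)


def route_request(now, target_url, windows,
                  window_hours=2, survey_url="/survey"):
    """Return the URL the gateway should send the patron to.

    Inside a survey window the patron is sent to the (hypothetical) survey
    page, which carries the original target so the patron can be passed
    through after answering. Outside a window the request passes straight
    through to the resource.
    """
    slot = timedelta(hours=window_hours)
    for start in windows:
        if start <= now < start + slot:
            return f"{survey_url}?next={quote(target_url, safe='')}"
    return target_url


if __name__ == "__main__":
    windows = draw_survey_windows(datetime(2003, 1, 1), datetime(2003, 1, 8),
                                  n_windows=5, seed=1)
    for w in windows:
        print("survey window starts", w.isoformat())
    print(route_request(windows[0], "https://example.org/database", windows))
```

Because every request passes through the same decision, in-library, on-campus, and off-campus users are sampled by the same rule, which is what makes the resulting sample a probability sample rather than a convenience sample.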

In an overview of performance measures in higher education and libraries, Kyrillidou (1998) identified three issues that should be taken into account when assessing the reliability and validity of academic library data: consistency across institutions and time; ease versus utility in gathering data; and values, meanings, and measurement. The last issue refers to the interpretation of data as guided by local conditions. The methodology for Web surveys demonstrated in this study, given the appropriate networking topology, addresses all three of these issues. It can be meaningfully applied with consistency across institutions and time. It is easy to implement and gathers useful data quickly. The data can be interpreted locally, and indeed, local questions can be asked to guide specific service decisions about networked electronic resources.

The authors are encouraged that the Web-based survey methodology employed at these six libraries is reliable as a model for similar user studies at other libraries. The methodology was adopted as part of the Association of Research Libraries New Measures programme in May, 2003 as the MINES project (Measuring the Impact of Networked Electronic Services). Participants in the MINES project will understand better the impact of electronic information offered through academic libraries on their institutions' research and instructional programmes.

¹ Some of these projects are summarized in an article by Shepherd and Davis (2002).

² For this research, our functional definitions for networked electronic services are similar to those found in the ARL E-Metrics Phase II Report.

The working definition of networked services is those electronic information resources and/or services that users access electronically via a computing network (1) from on-site in the library (2) remote to the library, but from a campus facility, or (3) remote from the library and the campus. Examples of networked resources include local, regional, and statewide library hosted or authored Web sites and library-licensed databases. Examples of networked services include: Text and numerical databases, electronic journals and books; e-mail, listservs, online reference assistance; Training in the use of these resources and services; Requests for services via online forms (i.e., interlibrary loans). (Shim et al., 2001: xi)

The definitions undergo a slight revision in 'The importance of network measures and statistics'. We included locally licensed databases, regional or statewide consortia licensed databases, aggregated databases, and publishers' databases, that is, online indexing and abstracting databases, full-text journal article aggregators, e-journals, and e-books, offered by the sampled libraries. We excluded publicly available Web sources because we were interested in resources for which there was an assigned cost. The one exception was PubMed, which many health science libraries fold into their access methods for subscription databases. For networked electronic services, we focused on the access to text and numerical databases, electronic journals, and electronic books, and on interlibrary loan and document delivery. The proposed methodology would work equally well for other categories of services from the definitions list, such as reference and information services (especially virtual reference), instruction, and institutional portals, but because of the circumstances of the sample libraries, we chose not to include them. For this paper, the term 'networked electronic services' is used for the resources and services described above.

³ Sponsored or funded research is defined by the United States Office of Management and Budget in OMB Circular A-21. The definitions of this and the other categories of purpose of use are given on both the Web-based survey and the print survey. Sponsored research includes research funded by grants or contracts from federal, state, or local governments; separately budgeted research projects funded by university money; and research training grants or contracts from a foundation or other outside party. The category includes only specially funded research projects, which are specifically budgeted and accounted for as organized research by the institution.

The authors are grateful for the willing, generous, and professional assistance of the following people in setting up, technically enabling, and administering the research studies described in this paper. Any mistakes, misrepresentations, or other errors are, of course, our own.

© the author, 2004. Last updated: 28 April, 2004